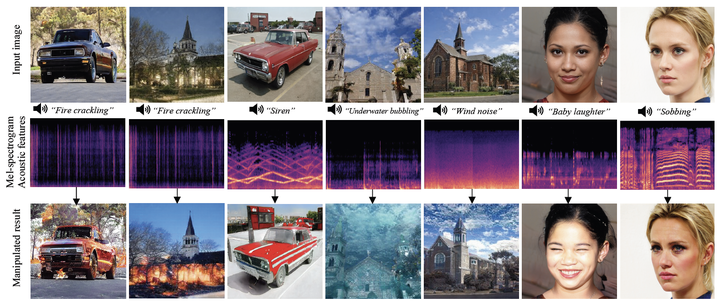

Image credit: Lee et al., NeurIPS Workshop 2021

Abstract

Semantically meaningful image manipulation often requires laborious manual examination for each desired manipulation. Recent work suggests that combining the representation power of existing Contrastive Language-Image Pretraining (CLIP) models with the generative power of StyleGAN can successfully manipulate a given image driven by textual semantics. Following this, we explore adding a new modality, sound, which conveys a different view of dynamic semantic information and can thus reinforce control over semantic image manipulation. Our audio encoder is trained to produce a latent representation from an audio input, which is aligned with image and text representations in the same CLIP embedding space. Given such aligned embeddings, we use a direct latent optimization method so that an input image is modified in response to a user-provided sound input. We demonstrate the effectiveness of our approach quantitatively and qualitatively, and show that sound-guided image manipulation can produce semantically meaningful images.
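
The direct latent optimization step described in the abstract can be illustrated with a toy sketch. This is not the authors' implementation: the StyleGAN generator and CLIP image encoder are replaced by a single hypothetical linear map `M` from latent code to embedding, the audio embedding is a random vector, and the loss (cosine distance to the audio embedding plus an L2 penalty that keeps the latent close to its starting point) is an assumed form that the abstract does not confirm. The function name `manipulate_latent` and all hyperparameters are likewise illustrative.

```python
import numpy as np


def cosine(u, v):
    """Cosine similarity between two 1-D vectors."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


def manipulate_latent(w0, M, audio_emb, lam=0.01, lr=0.1, steps=200):
    """Gradient descent on a latent code w, starting from w0.

    Assumed loss: (1 - cos(M w, audio_emb)) + lam * ||w - w0||^2
    M is a linear stand-in for "generate image, then embed it".
    """
    w = w0.copy()
    a = audio_emb / np.linalg.norm(audio_emb)  # unit-norm target
    for _ in range(steps):
        z = M @ w                              # embedding of the current "image"
        nz = np.linalg.norm(z)
        # Gradient of cos(z, a) with respect to z, for unit-norm a.
        grad_cos_z = a / nz - (z @ a) * z / nz**3
        # Chain rule back to w, plus the gradient of the L2 penalty.
        grad = -M.T @ grad_cos_z + 2.0 * lam * (w - w0)
        w -= lr * grad
    return w


rng = np.random.default_rng(0)
M = rng.standard_normal((16, 8))       # hypothetical latent-to-embedding map
w0 = rng.standard_normal(8)            # latent code of the input image
audio_emb = rng.standard_normal(16)    # stand-in for the audio encoder output

w_edit = manipulate_latent(w0, M, audio_emb)
before = cosine(M @ w0, audio_emb)
after = cosine(M @ w_edit, audio_emb)
print(f"cosine similarity before: {before:.3f}, after: {after:.3f}")
```

In a real pipeline the gradient would be obtained by automatic differentiation through the generator and encoder rather than by hand, and the weight `lam` would trade off manipulation strength against fidelity to the input image.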